Originally published on September 8, 2025

The Rise of AI Sextortion: How Criminals Are Weaponizing Deepfakes

A new breed of online predator does not need your intimate photos to destroy your life. They can create them.

Sarah thought she was safe. As a college student, she had never sent nude photos or engaged in risky online behavior. That did not matter to the criminal who found her Instagram photos and, within minutes, transformed them into explicit deepfake videos. The message that followed was simple: pay $500, or these videos would be sent to everyone she knew.

Sarah’s case reflects a disturbing trend: law enforcement has reported a rise in AI-powered sextortion. Unlike older scams that relied on victims sending real intimate content, today’s schemes can target anyone with a social media presence.

What Makes AI Sextortion Different and Dangerous

Traditional sextortion followed a familiar pattern. Criminals built fake online relationships, pressured victims into sharing private photos, and then demanded payment to keep those images from being released. The crime took time, manipulation, and a victim’s cooperation.

AI has changed that model entirely. Modern “nudifier” apps can digitally alter a photo in seconds. Deepfake tools can paste someone’s face onto explicit videos. Voice cloning technology can generate fake audio recordings. The line between authentic and fabricated content has become increasingly difficult to spot.

At Minc Law, we are seeing cases where victims never created intimate material, yet find themselves blackmailed with realistic fake content. Even when victims know the content is fabricated, the fear, shame, and emotional toll are very real.

The scale has also grown dramatically. What once involved predators targeting victims one by one has evolved into organized operations. Criminal groups now scrape hundreds of images from social media, run them through AI generators, and launch mass extortion campaigns.

The Technology Behind Modern Sextortion

The tools fueling this abuse are widely accessible. Many are marketed as entertainment apps and require little technical skill. A user uploads a photo, selects a template, and receives manipulated imagery within minutes.

More advanced operations use generative adversarial networks (GANs), which can create synthetic explicit content featuring real people’s faces. These systems analyze thousands of expressions and features to generate fakes convincing enough to fool most viewers.

Voice synthesis adds another weapon. Criminals can replicate voices from social media videos, phone calls, or even a short audio clip. Combined with deepfake video, they can create fabricated evidence of events that never happened.

The Economics of Digital Extortion

Sextortion has become a profitable criminal enterprise. The FBI estimates that perpetrators earn millions each year, with individual victims paying anywhere from a few hundred to several thousand dollars. Because the tools are cheap and payments are often demanded in cryptocurrency, operating costs are minimal.

Criminal groups frequently operate from jurisdictions with limited law enforcement cooperation, making prosecution difficult. They rely on cryptocurrency for payments, encrypted platforms for communication, and automated systems to manage large pools of victims. What was once an individual crime has become an organized industry.

The Human Cost of AI-Enabled Abuse

Current statistics highlight the scope of the problem:

- The FBI reports handling thousands of sextortion cases annually, with AI playing a growing role.

- Over the past two years, NCMEC’s CyberTipline has received more than 7,000 reports of child exploitation involving generative AI (GAI).

- A 2024 survey revealed that 1 in 10 minors say peers have used AI to generate nude images of other kids.

- In at least one confirmed case, a U.S. teenager died by suicide after scammers used an AI-generated nude image to blackmail them.

The Broader Impact of Digital Abuse

The damage extends far beyond individual victims. Families often experience deep stress when a loved one is targeted. Schools report heightened anxiety among students who fear being next. Communities question whether digital platforms are safe spaces for sharing personal content.

Mental health professionals describe treating victims who develop severe anxiety about being photographed, appearing online, or even leaving their homes. Some withdraw from social media entirely, limiting their educational and professional opportunities. Fear of being recognized from fabricated content can linger for years, damaging careers and personal relationships.

Challenges in Traditional Support Systems

Many victims struggle to find help. Law enforcement officers may lack training on deepfake technology. Counselors may not fully grasp the trauma of being targeted with synthetic content. Even family members often underestimate the harm, suggesting “everyone will know it’s fake” or “just ignore it.” These responses, though well-meaning, can leave victims feeling isolated and misunderstood.

Who Faces the Greatest Risk?

Teenagers Represent the Primary Target Demographic

Minors are the largest victim group, especially boys targeted in “financial sextortion” scams. Predators pose as romantic interests, generate fake sexual content, and demand payment in cryptocurrency or gift cards. The FBI has documented several teen suicides linked to these attacks.

Teens are especially vulnerable because they maintain broad online presences, may not recognize these new threats, and often feel too ashamed to tell adults. When fake images circulate in school, the humiliation can lead to bullying, social withdrawal, and academic disruption.

Women and Public Figures Face Heightened Exposure

Young women and public-facing individuals, including social media influencers, journalists, and local professionals, are frequent targets. In 2024, a meteorologist discovered dozens of explicit deepfake videos using her likeness spread across multiple platforms.

For women in conservative industries or communities, even fabricated content can harm reputations and careers. Public figures face the added risk of widespread exposure and long-term harassment.

Men and LGBTQ+ Individuals Also Face Significant Risk

Men are increasingly targeted through “honey trap” scams where criminals threaten to release fake explicit videos to employers or family members. LGBTQ+ victims may face additional blackmail, including threats to “out” them, which can be devastating in unsupportive communities.

Virtually Anyone Can Become a Target

AI has lowered the barrier to entry. Parents have received fabricated explicit images of their children. College students have been threatened with fake content shared with professors or employers. Even seniors are targeted as criminals exploit perceived vulnerability.

Legal Developments and Law Enforcement Response

For years, victims had little legal recourse. That changed in May 2025 with the passage of the TAKE IT DOWN Act.

The law requires platforms to remove reported non-consensual intimate content (including deepfakes) within 48 hours of a valid request. The Federal Trade Commission can impose civil penalties on platforms that fail to comply.

Individuals who publish or threaten to publish such content face fines and prison time, with enhanced penalties when minors are involved.
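For anyone tracking a removal request, the 48-hour window described above comes down to simple date arithmetic. The sketch below is only an illustration: the function names are hypothetical, and it assumes the clock starts when the platform receives a valid request, which may not match how the law is applied in a particular case.

```python
from datetime import datetime, timedelta, timezone

# The 48-hour removal window described in the text above.
REMOVAL_WINDOW = timedelta(hours=48)


def removal_deadline(request_received):
    """Return when the 48-hour window ends.

    Assumption: the window is counted from the moment the platform
    received the valid request.
    """
    return request_received + REMOVAL_WINDOW


def hours_remaining(request_received, now):
    """Hours left in the window; negative once the window has passed."""
    remaining = removal_deadline(request_received) - now
    return remaining.total_seconds() / 3600


if __name__ == "__main__":
    received = datetime(2025, 9, 8, 9, 30, tzinfo=timezone.utc)
    print("Removal due by:", removal_deadline(received).isoformat())
```

Keeping timestamps in UTC avoids confusion when the victim and the platform are in different time zones.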

Federal Enforcement Initiatives

The FBI has increased its focus on AI-enabled crimes, issuing public warnings and dedicating resources within its cybercrime and child exploitation units. International operations have disrupted sextortion networks, though the global and anonymous nature of these crimes continues to pose major challenges.

Federal prosecutors are treating AI sextortion cases with the same seriousness as traditional extortion, recognizing that the harm to victims is no less devastating whether the content is real or fabricated. Sentences have included multi-year prison terms and significant financial penalties.

State-Level Legal Frameworks

Several states have moved to address AI-generated intimate content. Virginia, California, Texas, and Tennessee have all passed laws specifically targeting deepfake or synthetic sexual imagery. Other states, including Indiana, Florida, Washington, Maryland, and Michigan, have expanded existing “revenge porn” or sexual image statutes to cover AI-generated content.

Many of these laws provide victims with civil remedies, such as the ability to sue for damages related to emotional distress, reputational harm, and financial losses. Some states also allow for injunctive relief or expedited court orders to force content removal. In states without deepfake-specific statutes, prosecutors often apply existing non-consensual pornography laws to AI-generated cases.

Response Protocol for Victims

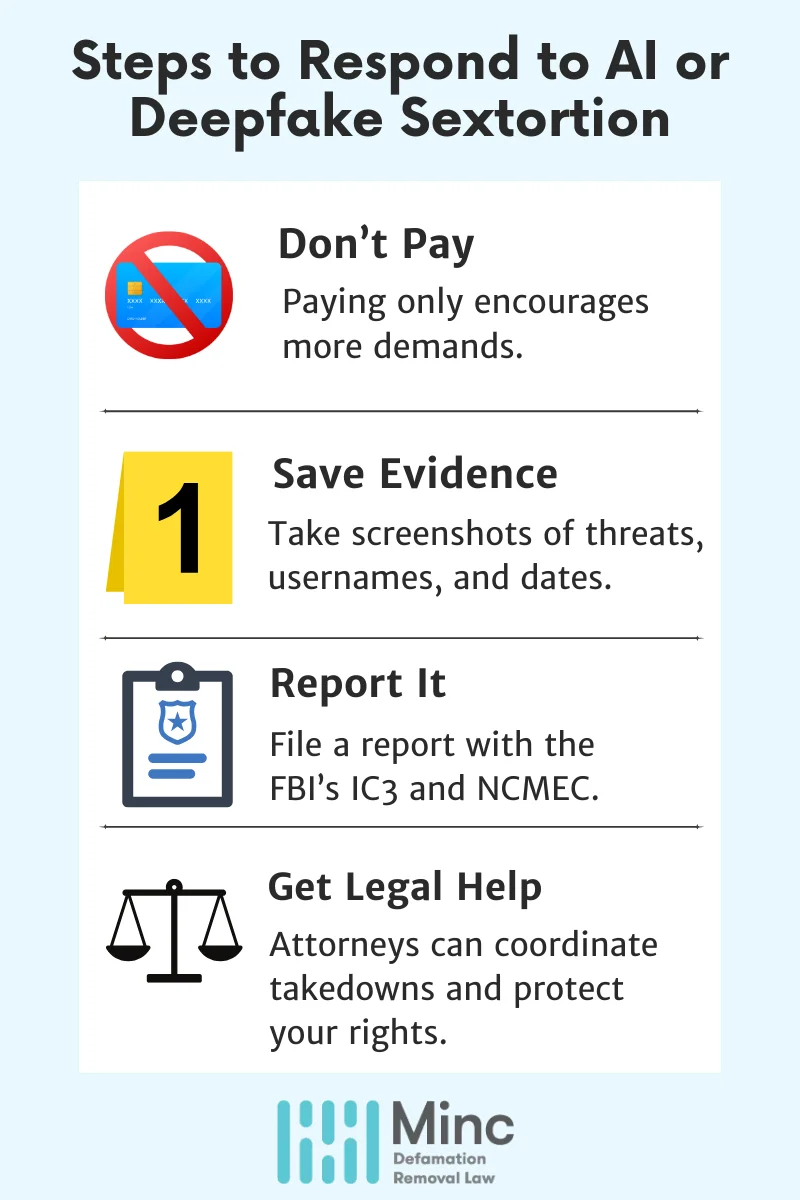

Maintain Composure and Do Not Pay

Your first instinct may be to pay and hope the problem disappears. Do not. Payment almost always leads to more demands and marks you as a profitable target.

Document Everything

Before cutting off communication, screenshot all messages, usernames, and threats. Record dates, times, and platforms. This evidence is crucial for law enforcement and legal action.
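For readers comfortable with a little scripting, the record-keeping described above can be kept in a simple, consistent log. The following is a minimal sketch, not a forensic standard: the field names and the `make_entry` and `to_json_line` helpers are hypothetical, and the SHA-256 fingerprint is included only as one common way to show later that a saved file has not changed.

```python
import hashlib
import json
from dataclasses import asdict, dataclass
from datetime import datetime, timezone


@dataclass
class EvidenceEntry:
    """One captured item, such as a screenshot or exported message."""
    platform: str      # where the threat arrived
    username: str      # the sender's account name as displayed
    description: str   # short note on what the capture shows
    captured_at: str   # UTC timestamp in ISO 8601 format
    sha256: str        # fingerprint of the captured file's bytes


def make_entry(platform, username, description, file_bytes, captured_at=None):
    """Build a log entry and fingerprint the file's contents."""
    when = captured_at or datetime.now(timezone.utc)
    return EvidenceEntry(
        platform=platform,
        username=username,
        description=description,
        captured_at=when.isoformat(),
        sha256=hashlib.sha256(file_bytes).hexdigest(),
    )


def to_json_line(entry):
    """Serialize one entry as a single JSON line for an append-only log."""
    return json.dumps(asdict(entry), sort_keys=True)


if __name__ == "__main__":
    # Placeholder bytes stand in for the contents of a real screenshot file.
    entry = make_entry("Instagram", "example_account",
                       "Message demanding payment", b"placeholder bytes")
    print(to_json_line(entry))
```

Each entry records the platform, username, and time alongside the file fingerprint, which mirrors the details worth handing to law enforcement or an attorney.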

Report to Authorities

Contact local police and file a complaint with the FBI’s Internet Crime Complaint Center (IC3). If minors are involved, also report to the National Center for Missing & Exploited Children (NCMEC). Every report helps authorities track patterns and dismantle criminal networks.

Terminate Communication and Request Removals

Block the attacker and report their accounts. If fake content has been posted, request immediate removal. The Take It Down service from NCMEC can help minors and families remove intimate imagery.

Seek Professional Assistance

Legal and emotional support both matter. Attorneys experienced in cybercrime can help with takedowns and civil claims. Mental health professionals can address the trauma of victimization.

Legal Advocacy and Future Developments

At Minc Law, we have watched AI evolve from a promising tool into one increasingly exploited for abuse. We have also seen victims reclaim control through legal action, resilience, and growing public awareness.

The environment is shifting in favor of victims. New laws offer protections, law enforcement agencies are taking sextortion seriously, and platforms are improving their response systems. While the risk cannot be completely eradicated, it can be effectively managed through awareness, preparation, and timely action.

Technology will continue to evolve, but so will our ability to fight back through stronger laws, more effective detection tools, and increased awareness.

If you or someone you know is facing AI sextortion, remember: the crime lies with the perpetrator, never the victim. Seek help quickly, document everything, and don’t face it alone. With the right support and advocacy, it is possible to regain control and move forward.

Predators rely on silence. By speaking out, knowing your rights, and taking decisive steps, you can help ensure their tactics fail.

This page has been peer-reviewed, fact-checked, and edited by qualified attorneys to ensure substantive accuracy and coverage.